How I Automatically Sync My Skool Members to Airtable Every Day

Keeping a daily list of my Skool members in Airtable has become a cornerstone of how I run automations, track subscriptions, and trigger workflows based on real membership state.

Skool doesn’t currently offer a public API for member exports, and manual CSV downloads don’t scale.

So I built a daily automated sync that:

- Exports my Skool members reliably

- Parses the export

- Updates Airtable every day with up-to-date member data

In this article, I’ll walk through the problem, my approach, the automation workflow, and why this makes everything downstream so much easier.

Why Sync Skool Members to Airtable?

At first glance, exporting a member list might seem trivial.

But manual exports quickly hit their limits if you want to:

- Track membership changes over time

- Segment members by tier

- Trigger further automations (emails, dashboards, churn alerts)

- Use member data in workflows outside Skool

Airtable gives me:

- A structured database of members

- Reliable filters (paid vs free, onboarding status)

- Triggers for automations

- A backend that integrates with tools like n8n, Make, and Zapier

That’s why I built this automated sync.

The Challenge: No Reliable Member API

Skool doesn’t provide an official member API that I can poll daily. It does offer a CSV export, but downloading it by hand every day is tedious and doesn’t scale.

This leaves two options:

- Scraping the UI programmatically (breaks when Skool changes layout)

- Using the official CSV export and handling it reliably in automation

I went with the second option.

The CSV export is stable, predictable, and much less likely to break when Skool changes UI details. The tradeoff is that you need a real browser session — but that’s a tradeoff I’m happy to make for reliability.

Exporting Members Using a Headless Browser

To automate the export, I use Playwright running in Python behind a small API I host myself. An n8n Code node calls that API, which drives the browser and hands the results back to the workflow.

The workflow does the following:

- Logs into Skool using a real browser session

- Navigates to the members page for the community

- Clicks the Export button

- Captures the downloaded CSV file

- Parses and normalizes the member data before sending it downstream

Because this uses the same export button you’d click manually, it avoids undocumented APIs and brittle scraping logic.
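The steps above map onto a handful of Playwright calls. The author’s exporter runs Playwright from Python; to stay in the same language as the n8n Code node in the next section, this is a minimal sketch using Playwright’s Node API instead. The login URL, the members-page path, and all selectors are hypothetical placeholders, not verified against Skool’s markup, and the function receives an already-open `page` so the flow can be exercised without launching a browser.

```javascript
// Sketch: drive Skool's own Export button with Playwright (Node API).
// ASSUMPTIONS: the URLs and selectors below are hypothetical placeholders;
// check them against Skool's current markup before relying on this.
const SKOOL_LOGIN_URL = 'https://www.skool.com/login';
const SELECTORS = {
  email: 'input[type="email"]',
  password: 'input[type="password"]',
  submit: 'button[type="submit"]',
  exportButton: 'button:has-text("Export")',
};

// `page` is a Playwright Page object.
async function exportMembersCsv(page, { email, password, groupId }) {
  // 1. Log in with a real browser session.
  await page.goto(SKOOL_LOGIN_URL);
  await page.fill(SELECTORS.email, email);
  await page.fill(SELECTORS.password, password);
  await page.click(SELECTORS.submit);
  // Wait until we have navigated away from the login page.
  await page.waitForURL((url) => !url.pathname.startsWith('/login'));

  // 2. Open the community's members page (hypothetical path).
  await page.goto(`https://www.skool.com/${groupId}/-/members`);

  // 3. Click Export and capture the download. Start waiting for the
  //    download event *before* clicking so the event can't be missed.
  const [download] = await Promise.all([
    page.waitForEvent('download'),
    page.click(SELECTORS.exportButton),
  ]);

  // 4. Resolve to the local path of the downloaded CSV for the parsing step.
  return download.path();
}
```

In a real run, `page` would come from something like `const browser = await chromium.launch(); const page = await browser.newPage();`, with `chromium` imported from the `playwright` package.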

Credentials Handling

Credentials are injected securely via environment variables. For local testing or demos, they can also be passed explicitly as workflow input, but in production I always use environment variables.

Here’s the top of the n8n Code node where credentials are resolved:

```javascript
// Get credentials - priority: input → env vars → hardcoded fallback
const skoolEmail =
  $input.first().json?.email ||
  $env.SKOOL_EMAIL ||
  'you@example.com';

const skoolPassword =
  $input.first().json?.password ||
  $env.SKOOL_PASSWORD ||
  'your_password_here';

const skoolGroupId =
  $input.first().json?.groupId ||
  $env.SKOOL_GROUP_ID ||
  'my-community';

// Fail fast if credentials are not configured.
// The placeholder fallbacks act as sentinels: if one survives, nothing real was set.
if (
  !skoolEmail || skoolEmail === 'you@example.com' ||
  !skoolPassword || skoolPassword === 'your_password_here' ||
  !skoolGroupId || skoolGroupId === 'my-community'
) {
  throw new Error(
    'Skool credentials are not configured. ' +
    'Set SKOOL_EMAIL, SKOOL_PASSWORD, and SKOOL_GROUP_ID.'
  );
}
```

This setup gives me a few important properties:

- Secure by default (environment variables in production)

- Explicit failures if something is misconfigured

- Easy to reuse across environments and workflows

Once credentials are resolved, they’re sent to the Python service, which launches Playwright, performs the export, and returns structured member data to n8n.

I deliberately avoided scraping internal APIs or reverse-engineering network requests. This approach is slower than raw HTTP calls — but it’s far more reliable over time, which matters for a workflow that runs every single day.
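The hand-off between n8n and the Python service can be as simple as one HTTP request. Here is a minimal sketch of that step in the Code node, assuming a hypothetical `/export-members` endpoint that answers with `{ "members": [...] }`. The `post` function is injected (also an assumption) so the logic works with whichever HTTP helper your n8n setup exposes, or with a stub in tests.

```javascript
// Sketch: ask the self-hosted Python/Playwright service for an export and
// turn its response into n8n items.
// ASSUMPTIONS: the endpoint path and the { members: [...] } response shape
// are hypothetical; `post(url, body)` resolves to the parsed JSON response.
const EXPORT_PATH = '/export-members';

async function requestMemberExport(post, baseUrl, { email, password, groupId }) {
  const url = new URL(EXPORT_PATH, baseUrl).toString();
  const response = await post(url, { email, password, groupId });

  // Fail loudly instead of passing a malformed payload downstream.
  if (!response || !Array.isArray(response.members)) {
    throw new Error(`Unexpected response from ${url}`);
  }

  // n8n Code nodes return an array of items shaped like { json: {...} }.
  return response.members.map((member) => ({ json: member }));
}
```

Returning `{ json: ... }` objects lets each member flow into the next n8n node as its own item.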

How the Daily Sync Works

- Trigger the automation once per day (cron schedule).

- Programmatically fetch the Skool members export.

- Normalize the CSV contents.

- Upsert (insert/update) members in Airtable.

- Create records for new members and update existing records when a member’s status changes.

Because every write is an upsert keyed on the member’s email, existing records are updated in place instead of duplicated, and Airtable stays the single source of truth for member data in this workflow.
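Put together, the daily run is a short chain of steps. The sketch below passes each step in as a function (all names hypothetical); in the author’s setup these are separate n8n nodes, and the daily trigger itself is n8n’s schedule, so it isn’t shown. It also adds one safeguard worth considering: refusing to continue when the export yields no members, so a failed login or empty download fails the run loudly instead of silently syncing nothing.

```javascript
// Sketch of the daily run as plain functions (names are hypothetical).
// Each step is injected so the orchestration can be tested in isolation.
async function runDailySync({ fetchExportCsv, parseCsv, normalizeMember, upsertMembers }) {
  const csvText = await fetchExportCsv();      // fetch the Skool export
  const rows = parseCsv(csvText);              // CSV text -> row objects
  const members = rows
    .map(normalizeMember)                      // normalize each row
    .filter((m) => m && m.Email);              // drop rows without a usable key

  // Guard: an empty export almost always means the export step failed.
  if (members.length === 0) {
    throw new Error('No members parsed from export; aborting before upsert');
  }

  const result = await upsertMembers(members); // insert new, update existing
  return { synced: members.length, result };
}
```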

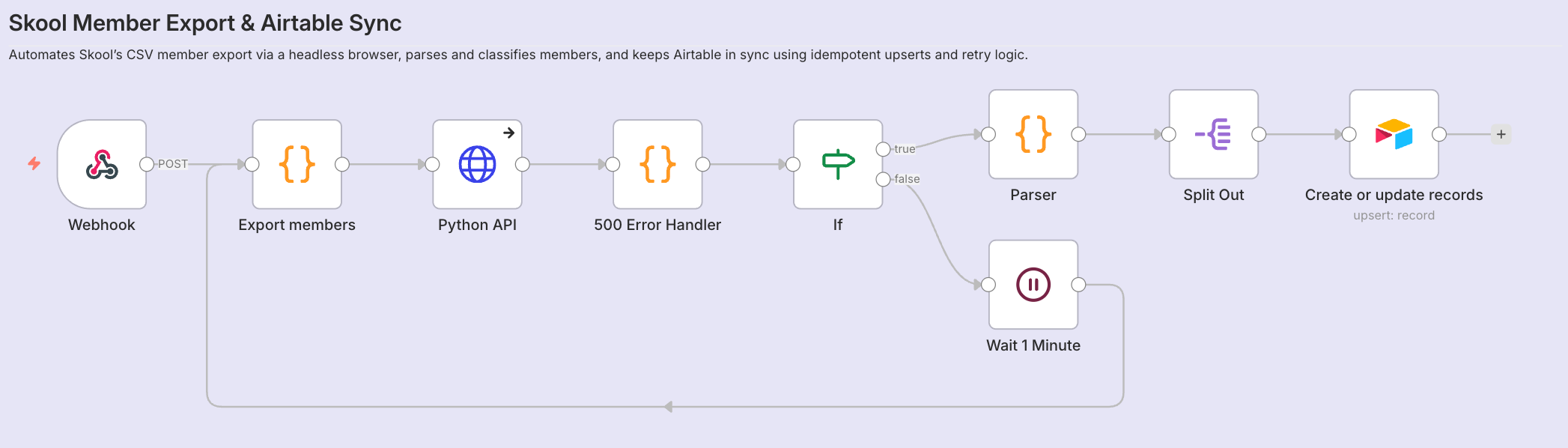

Overview of the Automation Workflow

At a high level, the workflow does this:

Daily Trigger

The process starts with a scheduled trigger — it runs once per day so I always have fresh member data.

Get Skool Members Export

Instead of scraping Skool, I use the official CSV export mechanism. This keeps the workflow robust: it rarely breaks, even when Skool changes its visual design.

Parse & Normalize

Once I have the CSV, I parse it into structured fields. For each member, I normalize key attributes like:

- Full name

- Membership tier

- Join date

- Paid/free status

This data format makes it easy to upsert into Airtable.
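Parsing deserves a real CSV parser, since names can contain commas and quotes. The self-contained sketch below handles quoted fields, escaped quotes (`""`), and CRLF or LF line endings in the style of RFC 4180, and returns one object per row keyed by the header line. It makes no assumption about which columns Skool’s export contains.

```javascript
// Minimal RFC 4180-style CSV parser: handles quoted fields, embedded commas,
// escaped quotes ("") and CRLF/LF line endings. Returns objects keyed by header.
function parseCsv(text) {
  const rows = [];
  let row = [];
  let field = '';
  let inQuotes = false;

  for (let i = 0; i < text.length; i++) {
    const ch = text[i];
    if (inQuotes) {
      if (ch === '"' && text[i + 1] === '"') { field += '"'; i++; } // escaped quote
      else if (ch === '"') { inQuotes = false; }                     // closing quote
      else { field += ch; }                                          // any char, incl. commas/newlines
    } else if (ch === '"') {
      inQuotes = true;
    } else if (ch === ',') {
      row.push(field);
      field = '';
    } else if (ch === '\n' || ch === '\r') {
      if (ch === '\r' && text[i + 1] === '\n') i++;                  // treat CRLF as one break
      row.push(field);
      rows.push(row);
      row = [];
      field = '';
    } else {
      field += ch;
    }
  }
  // Flush the last row if the file doesn't end with a newline.
  if (field !== '' || row.length > 0) { row.push(field); rows.push(row); }

  // Strip a UTF-8 BOM from the first header, then map each row to an object.
  const [header = [], ...body] = rows;
  const keys = header.map((h, idx) => (idx === 0 ? h.replace(/^\uFEFF/, '') : h).trim());
  return body
    .filter((cells) => cells.some((c) => c.trim() !== ''))           // skip blank lines
    .map((cells) => Object.fromEntries(keys.map((k, idx) => [k, (cells[idx] ?? '').trim()])));
}
```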

Airtable Upsert

The automation checks if a member already exists in Airtable (by email). If the member exists, it updates fields; otherwise it creates a new record.

That way, changes such as subscription upgrades, downgrades, and churn get reflected automatically.
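If you call Airtable’s REST API directly instead of going through an n8n node, the exists-then-update-or-create logic can be delegated to Airtable itself: the “update multiple records” endpoint (`PATCH /v0/{baseId}/{tableIdOrName}`) accepts a `performUpsert.fieldsToMergeOn` option and at most 10 records per request. The sketch below builds those batched request bodies with `Email` as the merge field; the base ID, table name, and token are placeholders, and sending relies on the global `fetch` available in Node 18+.

```javascript
// Sketch: upsert members into Airtable via the REST API, merging on Email.
// ASSUMPTIONS: baseId, tableName and token are placeholders; the table has a
// field literally named "Email". Airtable accepts at most 10 records per request.
const AIRTABLE_BATCH_SIZE = 10;

// Build one PATCH body per batch of up to 10 members.
function buildUpsertBatches(members, mergeField = 'Email') {
  const batches = [];
  for (let i = 0; i < members.length; i += AIRTABLE_BATCH_SIZE) {
    batches.push({
      performUpsert: { fieldsToMergeOn: [mergeField] },
      records: members.slice(i, i + AIRTABLE_BATCH_SIZE).map((fields) => ({ fields })),
    });
  }
  return batches;
}

// Send batches one at a time. Airtable limits each base to 5 requests per
// second, so large syncs may also need a short delay between requests.
async function upsertMembers(members, { baseId, tableName, token }) {
  const url = `https://api.airtable.com/v0/${baseId}/${encodeURIComponent(tableName)}`;
  for (const body of buildUpsertBatches(members)) {
    const res = await fetch(url, {
      method: 'PATCH',
      headers: { Authorization: `Bearer ${token}`, 'Content-Type': 'application/json' },
      body: JSON.stringify(body),
    });
    if (!res.ok) throw new Error(`Airtable upsert failed: ${res.status} ${await res.text()}`);
  }
}
```

With the match done on `Email`, no separate lookup step is needed. The trade-off is that the merge field must be unique in the table: if more than one record matches, Airtable rejects the request.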

Example Results

Once synced, each Skool member is stored as a normalized Airtable record with fields that are easy to reason about and automate against.

Each record includes:

- FirstName and LastName (from Skool)

- FullName (derived for convenience)

- Email (used as the unique identifier)

- Tier (membership plan)

- Price and RecurringInterval (when available)

- Status (Free or Paid)

- IsPaid (boolean flag for automation logic)
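A normalization step that produces exactly these fields might look like the sketch below. The input column names (`FirstName`, `LastName`, `Email`, `Tier`, `Price`, `RecurringInterval`) are hypothetical stand-ins for whatever headers your Skool export actually uses, and deriving paid status from a positive price is also an assumption.

```javascript
// Sketch: map one parsed CSV row to the Airtable record shape described above.
// ASSUMPTIONS: the input column names are hypothetical, and "paid" is inferred
// from a positive price; adjust both to match your actual export.
function normalizeMember(row) {
  const firstName = (row.FirstName || '').trim();
  const lastName = (row.LastName || '').trim();
  // Lowercase + trim so the email works as a stable unique key for upserts.
  const email = (row.Email || '').trim().toLowerCase();
  if (!email) return null; // no usable key: caller should skip this row

  // Price may arrive as "$49.00" or "49"; keep only digits and the dot.
  const priceText = String(row.Price ?? '').replace(/[^0-9.]/g, '');
  const parsedPrice = Number(priceText);
  const price = priceText === '' || Number.isNaN(parsedPrice) ? null : parsedPrice;
  const isPaid = price !== null && price > 0;

  return {
    FirstName: firstName,
    LastName: lastName,
    FullName: [firstName, lastName].filter(Boolean).join(' '),
    Email: email,
    Tier: (row.Tier || '').trim() || null,
    Price: price,
    RecurringInterval: (row.RecurringInterval || '').trim() || null,
    Status: isPaid ? 'Paid' : 'Free',
    IsPaid: isPaid,
  };
}
```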

Because the data is normalized up front, Airtable becomes a reliable source of truth rather than a raw export dump.

This makes it trivial to:

- Build views like Active Paid Members

- Calculate churn and growth over time

- Trigger onboarding or upgrade automations

- Drive downstream workflows (GitHub access, notifications, reporting)
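For churn and growth, one possible approach (an illustration, not necessarily how the author computes it) is to compare yesterday’s normalized snapshot with today’s, keyed by email. The pure functions below classify members as joined, left, upgraded, or downgraded, where “upgraded” simply means moving from free to paid, and derive a paid churn rate from the result.

```javascript
// Sketch: diff two daily snapshots (arrays of normalized members keyed by Email).
// Classification rules are illustrative: "upgraded" = free -> paid,
// "downgraded" = paid -> free, "left" = present yesterday but missing today.
function diffSnapshots(previous, current) {
  const prevByEmail = new Map(previous.map((m) => [m.Email, m]));
  const currByEmail = new Map(current.map((m) => [m.Email, m]));
  const changes = { joined: [], left: [], upgraded: [], downgraded: [] };

  for (const [email, member] of currByEmail) {
    const before = prevByEmail.get(email);
    if (!before) changes.joined.push(email);
    else if (!before.IsPaid && member.IsPaid) changes.upgraded.push(email);
    else if (before.IsPaid && !member.IsPaid) changes.downgraded.push(email);
  }
  for (const email of prevByEmail.keys()) {
    if (!currByEmail.has(email)) changes.left.push(email);
  }
  return changes;
}

// Paid churn = paid members who left or downgraded / paid members yesterday.
function paidChurnRate(previous, changes) {
  const paidBefore = previous.filter((m) => m.IsPaid);
  if (paidBefore.length === 0) return 0;
  const lost = new Set([...changes.left, ...changes.downgraded]);
  return paidBefore.filter((m) => lost.has(m.Email)).length / paidBefore.length;
}
```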

What I Use to Build This

Here’s the toolchain that makes this reliable:

- n8n for orchestrating the daily automation (self-hosted)

- Headless-browser export logic (Playwright) to fetch the CSV

- CSV parsing and normalization in the workflow

- Airtable to upsert member records

This system runs quietly in the background and has saved me hours every month.

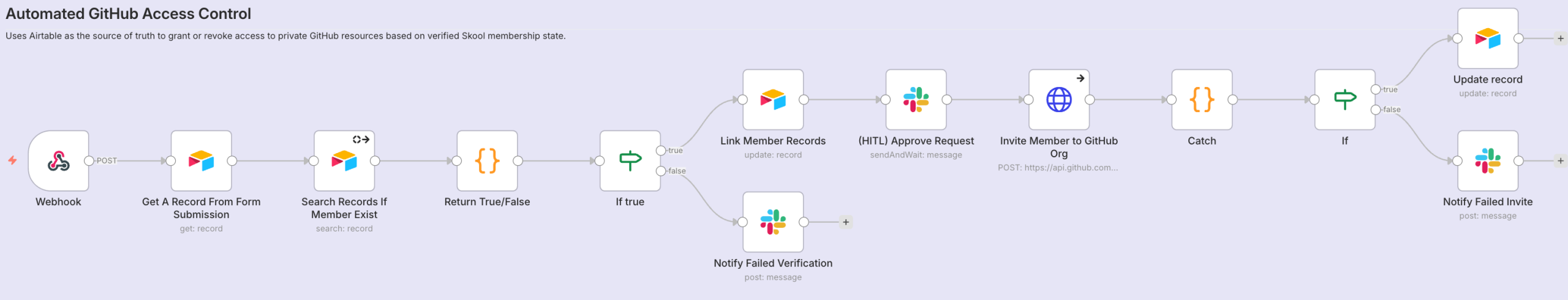

How This Feeds Into My Premium GitHub Automation

This daily member sync isn’t just for reporting.

I also use it as the source of truth for automating access to my premium GitHub organization.

When a member joins, upgrades, or loses access, that state already exists in Airtable. From there, a separate n8n workflow handles things like:

- Verifying the member exists and is paid

- Requesting manual approval where needed

- Inviting the member to a private GitHub organization

- Handling failures and retries

- Keeping access in sync over time

Because membership data is centralized, I don’t need to manually manage GitHub access or reconcile lists across platforms. The automation runs off the same data that powers my dashboards and onboarding flows.

This is one of the reasons I care so much about making the Skool export reliable — once it’s correct, everything downstream becomes simpler.
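The invite step of that separate workflow comes down to GitHub’s “create an organization invitation” REST endpoint (`POST /orgs/{org}/invitations`), which accepts an `email` and a `role` such as `direct_member`. A minimal sketch, assuming the Airtable records carry the `Email` and `IsPaid` fields described earlier and that you track already-invited emails yourself (a hypothetical `alreadyInvited` set); the org name and token are placeholders, and manual approval, retries, and access removal are left out.

```javascript
// Sketch: invite paid members to a GitHub organization by email.
// ASSUMPTIONS: `members` are normalized Airtable records with Email/IsPaid;
// `alreadyInvited` is a Set of emails you track yourself (hypothetical).
function selectMembersToInvite(members, alreadyInvited) {
  return members.filter((m) => m.IsPaid && m.Email && !alreadyInvited.has(m.Email));
}

async function inviteToOrg(org, email, token) {
  const res = await fetch(`https://api.github.com/orgs/${org}/invitations`, {
    method: 'POST',
    headers: {
      Accept: 'application/vnd.github+json',
      Authorization: `Bearer ${token}`,
      'X-GitHub-Api-Version': '2022-11-28',
      'Content-Type': 'application/json',
    },
    body: JSON.stringify({ email, role: 'direct_member' }),
  });
  // 201 = invitation created; anything else is returned for retry or review.
  return { email, ok: res.status === 201, status: res.status };
}

async function inviteNewPaidMembers(org, members, alreadyInvited, token) {
  const results = [];
  for (const member of selectMembersToInvite(members, alreadyInvited)) {
    results.push(await inviteToOrg(org, member.Email, token));
  }
  return results;
}
```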

Final Thoughts

Getting your member list out of Skool on a schedule might seem niche — but once you have it in Airtable, you unlock a world of possibilities:

- Dashboards and monitoring

- Automated onboarding and follow-ups

- Integration with CRMs or email systems

- Advanced segmentation for insights

If you want access to the full workflows and infrastructure behind systems like this, I share them inside my Skool community: